Loop like a native

Ned Batchelder

@nedbat

http://bit.ly/pyiter

tl;dl

Iteration is everywhere

Be direct

Write more abstractions

This talk is billed as Beginner, and sounds like a beginner topic, but I

prefer to think of it as Fundamental. Plenty of expert Python programmers

aren't making enough use of the tools I'm going to talk about.

Python has a nice model of abstract iteration which can be used to

increase the expressiveness of your programs. Python's iteration tools are

one of the most underused features of the language, especially by

programmers coming from other "similar" languages.

My goal here is to show Python iteration in a light that would encourage

programmers to explore more of its possibilities.

All programs iterate over data, of various sorts. When using iteration,

you should be as direct as you can, and you should use more abstractions to

make your loops as clear and direct as you can.

~ Iteration basics ~

Iteration

i = 0

while i < len(my_list):

v = my_list[i]

print v

i += 1

for i in range(len(my_list)):

v = my_list[i]

print v

for v in my_list:

print v

Let's say you have a list of numbers, and you want to print each of

them. One way to do it is shown in the first code sample: start a counter

at zero, and as long as the counter is less than the length of the list,

access that element of the list, and print it. Then increment the counter,

and continue on to the next element. Eventually, the while loop will run

over the whole list.

This while loop works, and is a least common denominator: this way of

thinking about the loop can be written in almost any language.

In Python, there's a simpler way to have a loop that ranges from zero to

N-1. You can use the idiom "for i in range(N)", as we see next. Here i

again takes on values starting with zero and going up to the last index of

my_list. This is the Python version of C or Javascript's classic for-loop

structure.

C programmers coming to Python often end here: they are used to a loop

that iterates over integers, and range() gives them a nice compact way to

do it.

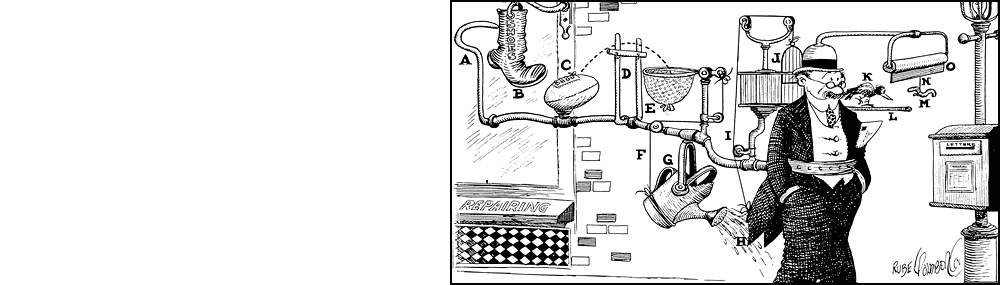

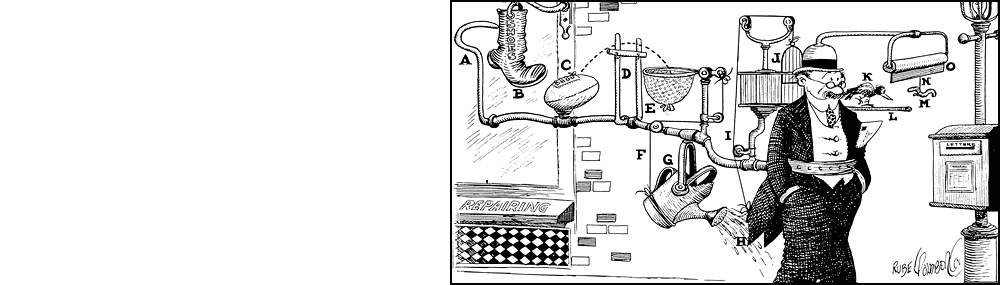

But Python gives us a much more natural way to loop over the values in

my_list. Why are we talking about integers? This is like a Rube Goldberg

machine. We set up a mechanism to give us integers, when we don't want the

integers at all, so the first thing we do with each integer is turn it into

a list element. We might has well have written, "the boot kicks the ball

into the net, which tips the watering can..."

Rather than iterating over indexes, and using the index i to

get the value we really want from the list, we can simply loop over the

values directly.

The last code sample shows the right way to write this loop. "for v in

my_list" gives us each value in my_list in the variable v, with no need to

fiddle around with the index i at all. What started as a five-line while

loop with two variables is now a two-line loop with only one.

The for loop

for name in iterable:

statements

Iterable produces a stream of values

Assign stream values to name

Execute statements once for each value in iterable

Iterable decides what values it produces

Lots of different things are iterable

The for loop is Python's versatile swiss-army-knife iteration tool. It

can iterate over all sorts of Python objects. Any Python object can be

"iterable," which means it can provide a stream of values. Any iterable

can be used in a for loop. The for loop extracts values from the stream,

assigns them to the name, and execute the statements in the body once for

each value.

On the face of it, this seems simple. But this simplicity provides

great power. The for loop can be used in all sorts of situations to iterate

all sorts of values. And you can often re-shape your iterations to use the

for loop more powerfully.

An iterable in Python is any value that can provide a stream of values.

In our first example, the list of numbers was the iterable, but there

are lots of other examples.

Lists ⇒ elements

for e in [1, 2, 3, 4]:

print e

1

2

3

4

There are many different iterable objects in Python, and each provides

its stream of values in its own way. As we've seen, if you iterate

a list, it will provide its elements in order.

Strings ⇒ characters

for c in "Hello":

print c

H

e

l

l

o

If you iterate a string, it will produce a stream of the characters in

the string.

Dicts ⇒ keys

d = { 'a': 1, 'b': 2, 'c': 3 }

for k in d:

print k

a

c

b

In surprising order!

# Also:

for v in d.itervalues():

for k,v in d.iteritems():

If you iterate a dictionary, it will give you its keys. This might be a

little surprising, what about the values? Dictionaries are best thought of

as a container of keys, where each key also has a value associated with it.

If you want, you can choose to use methods on the dictionary to iterate

over its values, or over its key/value pairs.

Notice that the keys appear in a surprising order. Dictionaries have no

inherent order. When iterated, they produce their keys in an arbitrary

order. Each iterable can decide the semantics of its sequence.

Dictionaries promise to give you all of the keys, with no extras, and no

duplicates, but make no guarantee about the order of the keys.

This demonstrates an important point: iterating a value doesn't mean you

can index into it. Iteration only knows how to get the next value, it

can't get the first, or the last, or the third-from-now.

Files ⇒ lines

with open("gettysburg.txt") as f:

for line in f:

print repr(line)

'Four-score and seven years ago,\n'

'our fathers brought forth on this continent\n'

'a new nation,\n'

'conceived in liberty,\n'

'and dedicated to the proposition\n'

'that all men are created equal.\n'

An open file is iterable, its values are strings, one for each line in

the file. This is extremely convenient, since this is often the way text

files are consumed, one line at a time.

In this example I've printed repr(line) so you can see that the lines

still have a newline at the end, every byte in the file is provided

somewhere.

Stdlib has interesting iterables

for match in re.finditer(pattern, string):

# once for each regex match...

for root, dirs, files in os.walk('/some/dir'):

# once for each sub-directory...

for num in itertools.count():

# once for each integer... Infinite!

from itertools import chain, repeat, cycle

seq = chain(repeat(17, 3), cycle(range(4)))

for num in seq:

# 17, 17, 17, 0, 1, 2, 3, 0, 1, 2, 3, 0, 1, 2, 3, ...

The standard library provides a number of other examples of iterable

values.

The re module searches for a regex pattern in a string. The finditer

function takes a pattern and a string, and iterates over all the places

that pattern matches that string. It's a good way to process a number of

pattern matches in a simple way.

In the os module, the walk function takes a directory name, and iterates

over all the subdirectories in that tree. This iterator is interesting

because it's working on a tree structure, but linearizing it by producing

subdirectories one after the other.

The itertools module provides dozens of tools for working with

iteration. The count() function produces integers forever. Of course

you'll need some logic to decide when to end.

The itertools module also provides tools for combining iterations. The

chain() function takes a number of sequences, and chains them together,

first producing all the values from the first sequence, then the values

from the second sequence, and so on. In this example, the first sequence

is repeat(17, 3) which simply produces 17 three times. cycle() takes its

sequence and cycles around it forever, so the sequence produced here is

infinite: 17, 17, 17, 0, 1, 2, 3, 0, 1, 2, 3, 0, 1, 2, 3, ...

These are just a few examples of using iterables to represent a variety

of kinds of iteration, and doing it naturally without resorting to index

variables.

Other uses for iterables

new_list = list(iterable)

results = [f(x) for x in iterable]

total = sum(iterable)

smallest = min(iterable)

largest = max(iterable)

combined = "".join(iterable)

In addition to provide lot of interesting iterable values, Python also

uses iterables in lots of places, not just in for loops. Here are a few

examples.

The list() function creates a list using the values it gets from its

iterable argument. Keep in mind, the argument can be any iterable.

list(my_dict) will produce a list of the dictionary's keys.

list(my_open_file) will produce a list of the lines of the file, and so

on.

List comprehensions are a concise way to create a list using a

computation on an iterable. They are very powerful, but are outside the

scope of this presentation. Here we make a list of the values returned by

f for each value in the iterable.

The sum() function takes an iterable, and returns the sum of all the

values it finds. The iterable has to be a stream of things that can be

added. The min() function takes an iterable of comparable values (such as

strings or numbers), and returns the smallest value in the stream. Of

course there is a max() function as well, which returns the largest

value.

The join method on a string takes a stream of strings, and joins them

all together into one long string.

These are just a few examples of using iterables outside of for loops,

as streams of values that can be passed to functions, computed with, and so

on.

~ Common questions ~

When learning about Python's iteration, there are common questions that

keep coming up. Let's look at a few.

Q: How do I get the index?

No:

for i in range(len(my_list)):

v = my_list[i]

print i, v

Yes:

for i, v in enumerate(my_list):

print i, v

OK, back to our list of numbers. We know now that we can iterate it

directly, without need for Rube Goldberg integers. But sometimes you

really need the index. Perhaps you just want to print the values with

their position.

Some will go back to the range(len()) twistiness, but there's a better

way.

The second code example shows how to use the enumerate() function to

get two values at each iteration: i is the index of the value, and v is

the value. Now we can print the value with its index, again without

resorting to iterating over integers.

A: enumerate() makes useful pairs

names = ["Eiffel Tower", "Empire State", "Sears Tower"]

list(enumerate(names))

[(0, 'Eiffel Tower'),

(1, 'Empire State'),

(2, 'Sears Tower')]

for num, name in enumerate(names):

print num, name

0 Eiffel Tower

1 Empire State

2 Sears Tower

In addition to looping over iterables, you can manipulate iterables to

produce new iterables that might be more convenient for you. The built-in

enumerate() function takes one iterable, and produces a stream of pairs.

Each value from the iterable is paired with a number, starting with

zero.

In our example, the list "names" is given to enumerate() which iterates

the list, giving the values "Eiffel Tower", "Empire State", "Sears Tower".

It pairs those with the number 0, 1, 2, to produce a stream of pairs.

In the first code sample, we use list() to make a list of all the values

enumerate() produces. This gives us a list of pairs.

We can use multiple-name assignment to get those pairs as two separate

values, as in the bottom example. The variables num and name are assigned

to the number of each name, and the name itself, from the list "names".

This is a convenient way to iterate over the values in a stream while also

using their index.

Iteration vs indexing

Limited:

for i in range(len(my_list)):

v = my_list[i] # indexing!

print i, v

More powerful:

for i, v in enumerate(iterable):

print i, v

for linenum, line in enumerate(f, start=1):

#...

An important advantage to the enumerate style of numbering: it works

with any iterable. When iterating over integers, you need the second Rube

Goldberg step of indexing into the iterable with the index. Many iterables

don't support this operation. You can't index an open file to get the

100th line, for example.

The enumerate() technique works with any iterable, and so is much more

powerful, in addition to being more compact and direct.

The other bad way

i = 0

for v in iterable:

print i, v

i += 1

Stay focused!

By the way, another common style of getting the index of an iterable is

shown here: keep a separate counter, and increment it to keep it in step

with the enumeration. This is just a goofy sidecar bolted on the side of

your loop. It works, but adds extra complexity. If the loop is more

complex than shown here, it is easy for it to become long enough that the

index increment gets lost. It's much better to keep the loop simple.

Fewer moving parts mean fewer bugs.

Q: How do I loop over two lists?

names = ["Eiffel Tower", "Empire State", "Sears Tower"]

heights = [324, 381, 442]

for i in range(len(names)):

name = names[i]

height = heights[i]

print "%s: %s meters" % (name, height)

Eiffel Tower: 324 meters

Empire State: 381 meters

Sears Tower: 442 meters

OK, so we don't need to iterate over integers just to number the items

in our iteration. The next challenge from the integer-lovers is, "how do I

loop over two lists at once?"

The integer way is to again loop over a range of integers, and then use

list indexing on the two lists to get the corresponding values.

A: zip() makes pair-wise loops

A pair of streams becomes a stream of pairs

for name, height in zip(names, heights):

print "%s: %s meters" % (name, height)

Eiffel Tower: 324 meters

Empire State: 381 meters

Sears Tower: 442 meters

But again, there's no need to resort to integers.

The zip() built-in function takes a pair of streams, and "zips" them

together to produce a stream of pairs. In this example, we have two lists:

"names" has names in it, and "heights" has heights that go with each name.

zip(names, heights) will produce a stream of pairs, each pairing a name

with the corresponding height. Using multiple assignment, we unpack the

pairs into the variables name and height.

Notice again that because we don't rely on list indexing, zip() can work

with arbitrary iterables, not just lists.

dict() accepts a stream of pairs

names = ["Eiffel Tower", "Empire State", "Sears Tower"]

heights = [324, 381, 442]

dict(zip(names, heights))

{'Empire State': 381,

'Sears Tower': 442,

'Eiffel Tower': 324}

Here's another example of the power of iterables. The dict() function

will accept a stream of key/value pairs, and construct a dictionary from

them. If we have two parallel lists, "names" containing strings, and

"heights" containing numbers, zip(names, heights) will produce a stream of

pairs, and dict() will use that stream, and produce exactly the dictionary

we want.

Powerful

tall_buildings = {

"Empire State": 381, "Sears Tower": 442,

"Burj Khalifa": 828, "Taipei 101": 509,

}

>>> print max(tall_buildings.values())

828

>>> print max(tall_buildings.items(), key=lambda b: b[1])

('Burj Khalifa', 828)

>>> print max(tall_buildings, key=tall_buildings.get)

'Burj Khalifa'

One last example of the power of iteration. Suppose we've made our

dictionary of buildings and their heights.

We can use a few different uses of the max() function to find the

greatest height, the name and height of the tallest landmark, or just the

name. A great deal of power can be packed into a concise expression.

Python's iteration primitives give you the power to loop over

tall_buildings in a single bound!

~ Customizing iteration ~

We've seen the power of Python's iteration primitives. Let's talk about

ways to customize your iterations.

Make your own iteration

nums = [88, 73, 92, 72, 40, 38, 25, 20, 90, 72]

for n in nums:

if n % 2 == 0:

do_something(n)

def evens(stream):

them = []

for n in stream:

if n % 2 == 0:

them.append(n)

return them

for n in evens(nums):

do_something(n)

Another style of indirect iteration is iterating over a sequence but

picking and choosing among the items. Here we have a list of numbers, and

we want to do_something() with the even ones. In first code example, we

loop over the numbers, and test each to see if it is even. Only if it is,

do we perform our action.

An alternative is to write a function that accepts a sequence, and

produces the new sequence we want. The evens() function does this. It

iterates over its argument, making a list of the even values. Then we can

use it in our for loop to iterate over the even numbers directly.

This example has a problem, namely, that is makes an actual list of the

even values. We'll see in a bit how to avoid this.

In this case, the test for an even number in the loop isn't burdensome,

but this is a toy example. If the test were more complicated, or if it had

to be performed in many places, it would be a clear win to abstract the

selection into a function like evens().

By writing evens(), we're abstracting our iteration, and creating new

sequences to iterate over.

Generators

Functions return one value — Generators produce a stream

def hello_world():

yield "Hello"

yield "world"

for x in hello_world():

print x

Hello

world

Generators are a way to create your own iterables by writing a

function.

A normal function returns one value, with the return statement. A

generator looks just like a function, but instead of a return statement, it

has one or more yield statements. Calling the generator function creates

an iterator, and iterating it runs the code in the function. Each yield

statement executed produces another value in the stream.

Here's a toy example of a generator, just containing two yield

statements. If you iterate it with a for loop, you can see that

hello_world() produced a stream of two values, "Hello", and "world".

Real generators are not this simple, typically the body of the function

would itself have a loop, with yield statements within it.

Evens generator

def evens(stream):

for n in stream:

if n % 2 == 0:

yield n

for n in evens(nums):

do_something(n)

We can re-write our evens() function as a generator. The code is almost

the same, but instead of building a list and returning it at the end of the

function, we simply yield the values as we find them.

We use it the same way: call evens(), passing it the iterable we want it

to work on, and it returns an iterator that will produce the even values

from it.

The old evens function made a list, and returned the entire list as its

only value. That meant that nothing would happen in the caller until

evens() had examined the entire iterable sequence and stored all the even

values. For small inputs, that might be fine.

Our evens() generator will yield the first even number it finds, letting

the caller do work before the whole sequence is examined. Note this means

it can work on indefinite or even infinite streams.

Generators have the advantage that they are lazy: they do no work until

the first value is requested, and they only do enough work to produce that

value. Work after that doesn't happen until the next value is requested.

This makes them use fewer resources, and usable on more kinds of

iterables.

Generators can be confusing. They look very similar to functions,

but have very important differences. First, calling the generator doesn't

immediately begin running the code in the generator, it simply creates

an iterator. When the for loop or other consumer of the iterator starts

pulling values from the stream, then the code in the generator begins

executing.

When a yield statement is encountered, execution is suspended, and the

value is used as the next value in the stream. With a regular function, a

return statement ends the function for good. But with a generator, the

current state of the execution is remembered. When the next value is

requested from the iterable, execution continues in the generator where it

left off, after the yield statement. The next value in the stream is

produced when a yield statement is executed again. All the local variables

in the generator retain their values for the whole course of the stream.

This makes it a very convenient way to write iterables.

The iterator finally ends when the generator returns, either with an

explicit return statement, or by executing the last statement in the

generator.

Abstracting your iteration

f = open("my_config.ini")

for line in f:

line = line.strip()

if line.startswith('#'):

# A comment line, skip it.

continue

if not line:

# A blank line, skip it.

continue

# An interesting line.

do_something(line)

With generators, we have a very powerful tool for abstracting iteration.

Just as functions are great for abstracting a series of statements, and

classes are great for abstracting a collection of data and the methods that

operate on it, generators are great for abstracting iteration.

As a real-world example, consider a program that reads a text file and

acts on the lines in it. Our file can have comments, which are lines

starting with '#', and can also have blank lines, both of which should be

ignored.

Here's a sketch of the program. We open the file, then loop over the lines

in the file. We strip the whitespace from the ends of the line, then consider

whether the line starts with '#'. If it does, we continue the loop, which skips

this line of text and goes on to the next line in the file. Similarly, if the

line is blank, we continue to the next line in the file.

Finally, if the line isn't a comment or blank, then we process it in whatever

way we like. When all of the logic is considered, this loop calls do_something()

on all of the non-blank non-comment lines from the file.

Your own generator

def interesting_lines(f):

for line in f:

line = line.strip()

if line.startswith('#'):

continue

if not line:

continue

yield line

with open("my_config.ini") as f:

for line in interesting_lines(f):

do_something(line)

with open("my_other.dat") as f2:

for line in interesting_lines(f2):

do_something_else(line)

Our line-processing program works just fine, but it mixed together two

concerns. The first is, what are the interesting lines in the file, and

which should be ignored? Second, what should we do with the interesting

lines? These two questions may have nothing to do with each other. You

may need to re-use the first, for example, in a larger program that

processes a few different kinds of text files.

Using a generator, we can split this loop into two parts. The first

part, which lines are interesting, can be implemented as a generator. Our

interesting_lines() generator takes an open file and loops over its lines.

It uses the same code as before to skip over comment or blank lines, but

then instead of processing the interesting line, it simply yields it.

Now we can use interesting_lines(f) in a for loop, and the loop will

only see the interesting lines, all the uninteresting stuff has been

skipped in the generator. This makes it easy for us to reuse the

line-skipping logic in other loops.

Notice that our generator interesting_lines() is actually a pure filter:

it accepts a line-producing iterable of any sort, it doesn't have to be an

open file. And it produces a stream of lines, by picking the ones it likes

from the input stream.

Notice also that we have re-thought our iteration: in the original code,

we were iterating over all the lines in the file, and "manually" skipping

over the uninteresting ones. With our new generator, we can think instead

of iterating over the interesting lines in the file. This lets us work at

a higher level of abstraction.

Q: How do I break out of two loops?

for row in range(height):

for col in range(width):

value = spreadsheet.get_value(col, row)

do_something(value)

if this_is_my_value(value):

break # ← ???

As a last example of rethinking iteration, consider looping over a

two-dimensional structure like a spreadsheet. A simple way to do it is to

use two nested loops: the first loops over the rows, and the second loops

over the columns. Together the two loop variables can be used to access a

cell in the spreadsheet.

There's a problem with this, though: suppose we are checking the cells

for some condition, and when we find the condition, we want to stop looking

through cells. There's no nice way to break out of two loops at once. The

break statement shown here will only break out of the column loop, and will

leave the row loop untouched, so the search will continue from the

beginning of the next row.

There are ways to write the code so that both loops will end, but they

are messy and confusing.

A: Make the double loop single

def range_2d(width, height):

"""Produce a stream of two-D coordinates."""

for y in range(height):

for x in range(width):

yield x, y

for col, row in range_2d(width, height):

value = spreadsheet.get_value(col, row)

do_something(value)

if this_is_my_value(value):

break

A more interesting solution is to change the iteration by abstracting

away the two-dimensional nature. Here we have a generator called

range_2d(): it takes a width and height, and produces a stream of pairs

(x,y) that range over the entire 2D space of coordinates. It does this

using the same double-nested loop we had before, but now the loop body

simply yields the tuple we need to represent the coordinates.

Our main loop now changes from a doubly-nested loop to a regular single

loop. Each iteration of range_2d() produces another coordinate pair, and

we can process the coordinates just as we did before, except now when we've

found the cell we want, we can just break out of the loop, and all is

well.

This is a more unusual loop change than we've seen before. Here we

changed a double loop into a single loop by re-thinking what we were

iterating over. This is a better loop because it matches our own way of

describing the loop. No one would describe looking for a value in a cell

by saying, "once for each row, look at every cell in the row," which is the

English equivalent of our double loop. Instead, you'd say, "look at every

cell in the spreadsheet," which is what our new loop says.

Our generator lets us define "look at every cell" abstractly so that our

main program can use the most expressive way to get the job done.

Better: iterate cells

for cell in spreadsheet.cells():

value = cell.get_value()

do_something(value)

if this_is_my_value(value):

break

An even better solution would be for the spreadsheet to have a .cells()

method that provided an iterator over all cells. Notice our range_2d

solution still meant talking about integers, though they were pairs of

integers.

Iterating over pairs of integers when we really want to be iterating

over cells is just another form of Rube Goldberg thinking. Much better is

to add custom methods to compound objects that let you iterate directly

over the values you're interested in.

~ Low-level iteration ~

Lower level

Iterable: produces an iterator

Iterator: produces a stream of values

iterator = iter(iterable) # iterable.__iter__()

value = next(iterator) # iterator.next() or .__next__()

value = next(iterator)

...

Only operation on iterators is next()

Before we talk about how to make your objects iterable, a quick word

about the low-level mechanics of iteration.

There are really two kinds of objects involved in iteration you need to

know about. We've been talking about iterables, which are objects that

consist of a number of values. They can't actually be looped over

directly, to do that, we ask an iterable for an iterator. You can think of

an iterable as an object with values in a sequence, and an iterator as the

current position within that sequence. For example, the pages in a book

are iterable, and the bookmark is the iterator.

The important operation on an iterable is iter(), which will return an

iterator. And the only operation available on an iterator is next(), which

either returns the next value, or raises StopIteration, a special exception

that means the iteration is finished.

Iterators having only one operation is important, because it means

Python's iteration mechanism can be applied to as broad a range of data as

possible. You can't ask if there will be a next value, you can't skip

ahead values, you can't rewind the stream, you can't ask what the last

value was, and so on and so on.

All you can do is ask for the next value, and you'll either get one, or

you'll get a StopIteration exception. Some iterables provide more

operations, but the only one that is guaranteed is next().

Low-level iteration

Sometimes helpful

with open("blah.dat") as f:

# Read the first line

header_line = next(f)

# Read the rest

for data_line in f:

# ...

With the low-level next() operation, we can use iterables in other ways

than just looping over them entirely. For example, with an open file,

we can read the first line with next(), then use a for loop to read the

rest of the file.

Notice the for loop doesn't re-read the first line in the file. An open

file object is an iterator, it knows where it is in the file, and pulling

values from it will give you the next line at the current position.

Making your object iterable

class ToDoList(object):

def __init__(self):

self.tasks = []

def __iter__(self):

return iter(self.tasks)

todo = ToDoList()

...

for task in todo:

# ...

The ultimate way to abstract your iterations is to make your own objects

iterable. By implementing the special method __iter__, your object is

iterable. All __iter__ has to do is return an iterator of some kind.

The simplest way to do that is to use the iter() function on some

collection you have. Here we have a self.tasks list, and our __iter__ can

simply return iter(self.tasks).

Once we do that, our object can be iterated just like any other Python

value. Here we use it in a for loop, but it can also be used in all the

other ways iterators can.

__iter__ generators

class ToDoList(object):

def __init__(self):

self.tasks = []

def __iter__(self):

for task in self.tasks:

if not task.done:

yield task

def all(self):

return iter(self.tasks)

def done(self):

return (t for t in self.tasks if t.done)

A more powerful way to write __iter__ is to use a generator. Remember

that calling a generator returns an iterator, just what __iter__ has to

do.

Here we've changed our to-do list to only produce the not-done tasks

when it's iterated.

Remember that your object can be iterable in more than one way. You can

have methods that produce iterators as well. Here our to-do list has an

.all() method that returns all the tasks, simply by returning an iterator

from the self.tasks list. The .done() method returns the done tasks, using

a feature we haven't seen yet, a generator expression.

I used three different techniques for these three iterator methods, just

as a demonstration of a range of possibilities. The power here is in

providing customized iteration using all the tools Python gives us.

~ Wrapping up ~

There is much more in this topic than can be covered in a short talk:

- The "iterator protocol" is the actual sequence of function and

method calls that happen to create and consume iterables. It can

be useful for advanced uses of iterables.

- When you define a class, you can make it iterable by defining

a __iter__() method.

- List comprehensions are a compact way to describe a list in terms

of a computation over another iterable. They can make your code very

concise.

- Generator expressions are a lot like list comprehensions, but

generate values on demand, like generators.

- itertools is a module in the Python standard library full of

functions for creating, manipulating, and consuming iterables.

sum(slides)

Iteration is everywhere

Python has a clean powerful model for iteration

Abstract and customize your iterations!

To summarize:

- Iteration is a fundamental tool in all programming.

- Python has a simple yet powerful model for iteration that is used

throughout the language and standard library.

- Using the tool that iterables provide, you can re-think your

iterations to make them clearer, more concise, and more powerful.

Once you start using these tools and ideas, you'll feel like you have

superpowers too!

Questions?

Ned Batchelder

http://bit.ly/pyiter

@nedbat